What is Q2?

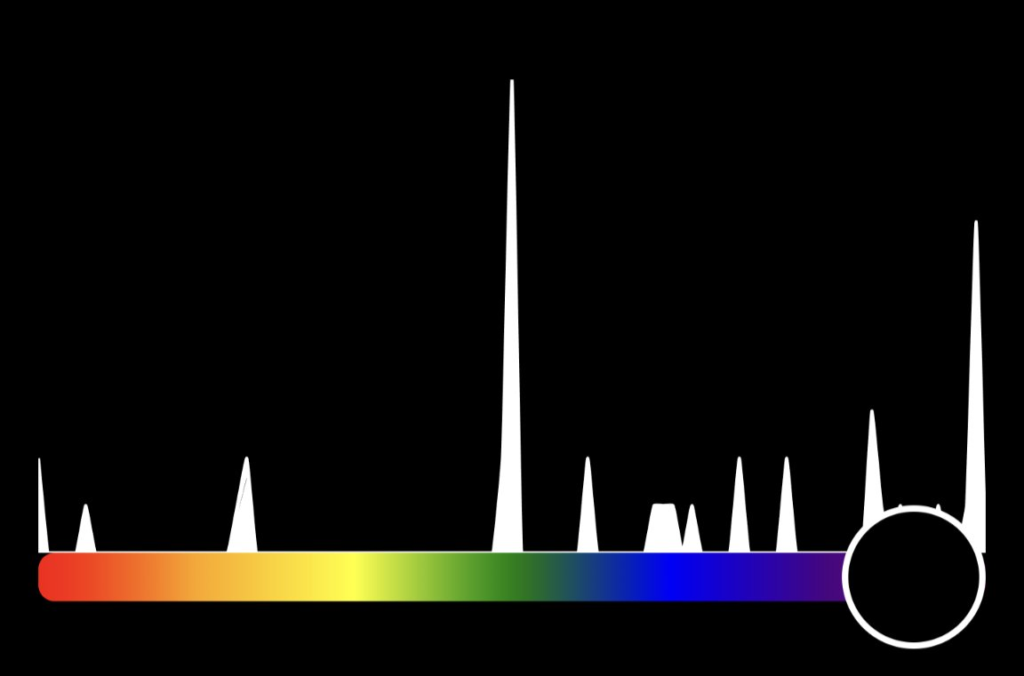

A: Q2 takes the temperature of opinions.

From worst to best, feelings are sorted by color.

It’s a mood ring for the internet.

Derivatives will be pervasive; early-adopter value is highest now.

We can do it ourselves, but if you feed the swans, you will be rewarded.

Minimum bid: $50K

⊆ Physix

0Ω